NextBot


{{LanguageBar}}

{{toc|style=display:none;}}

{{Capsule|

== What Is A NextBot? ==

The {{Code|NextBot}} system is mainly used for creating and controlling in-game entities that behave similarly to a player ''(commonly referred to as bots)''. Unlike [[NPC]]s, NextBots were designed for dynamic in-game thinking and movement.

{{Quote|As of February 2025, the code for the system is available as part of the [[Source SDK 2013]].}}

}}

{{Capsule|

=== How a NextBot works ===

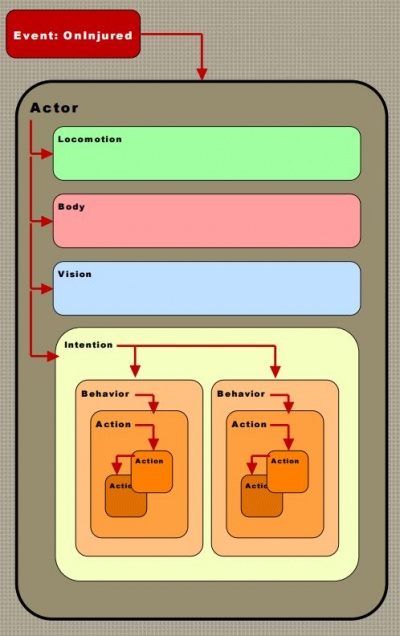

[[File:Nextbot_actor.JPG|thumb|400px|right|An example diagram of how an event affects a NextBot. This diagram is featured in the ''AI Systems of Left 4 Dead'' PDF.]]

A NextBot is built around an overall structure, known as an "Actor", which coordinates several more specific factors. When an event occurs, such as the diagram's {{Code|OnInjured}}, the Actor responds by changing these factors to reflect the event. Here is a summary of the factors a NextBot has:

=== {{Tint|color=darkgreen|Locomotion}} ===

Handles how a NextBot moves around in its environment.

{{Quote|For example, if a NextBot were programmed to flee after being injured, it would rely on this factor to move to a different position on the map.}}

=== {{Tint|color=red|Body}} ===

Handles the animations of a NextBot.

{{Quote|In the {{Code|OnInjured}} example, a NextBot would rely on this factor to play a flinching animation.}}

=== {{Tint|color=blue|Vision}} ===

Handles how a NextBot sees certain entities in its environment.

* {{Code|field-of-view}} and {{Code|line-of-sight}} checks are located here.

* Keep in mind that this factor is '''NOT''' required for NextBots to work.

{{Quote|For example, a skeleton in {{tf2|4}} will find and attack enemies regardless of whether it can see them.}}

=== {{Tint|color=yellow|Intention}} ===

The Intention factor manages the different behaviors a NextBot might have and is responsible for changing them.

''{{Quote|For a look at how this factor works in-game, see [[Example_of_NextBot_Behaviors | Example of NextBot Behaviors]].}}''

=== {{Tint|color=orange|Behavior}} ===

A Behavior contains a series of Actions, which it performs when the Intention factor chooses it.

{{Quote|A Behavior can be considered the NextBot equivalent of a [[Schedule]] for [[NPC]]s.}}

=== {{Tint|color=orange|Action}} ===

An Action contains the code a NextBot actually runs; it runs when its parent {{Tint|color=orange|Behavior}} is run by the {{Tint|color=yellow|Intention}} factor.

* Actions can have an additional child Action, which runs at the same time as its parent Action.

{{Quote|An Action can be considered the NextBot equivalent of a [[Task]] for [[NPC]]s.}}

|style=2}}
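The flow of control described above (an event reaching the Actor, each factor reacting, and the Intention changing which Action its Behavior runs) can be sketched in C++, the language the NextBot system is written in. This is a simplified, hypothetical model, not the Source SDK 2013 code: every class name, method name and log string below is an illustrative assumption. It shows Vision being optional, a child Action running in the same update as its parent, and an {{Code|OnInjured}} event causing a switch to a "Flee" Action.

```cpp
// Hypothetical, simplified model of a NextBot "Actor" and its factors.
// All names here are illustrative; this is NOT the Source SDK 2013 API.
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

using Log = std::vector<std::string>;  // records what each piece did

// An Action holds the code that actually runs. It may own one child
// Action, which is updated in the same tick as its parent.
class Action {
public:
    explicit Action(std::string name) : name_(std::move(name)) {}

    void SetChild(std::unique_ptr<Action> child) { child_ = std::move(child); }

    void Update(Log &log) {
        log.push_back("update " + name_);
        if (child_) child_->Update(log);  // child runs alongside its parent
    }

private:
    std::string name_;
    std::unique_ptr<Action> child_;
};

// A Behavior owns the Action that is currently running.
class Behavior {
public:
    explicit Behavior(std::unique_ptr<Action> initial) : action_(std::move(initial)) {}

    void Update(Log &log) {
        if (action_) action_->Update(log);
    }

    void ChangeTo(std::unique_ptr<Action> next) {
        assert(next && "a Behavior always needs an Action");
        action_ = std::move(next);  // the old Action (and its child) is destroyed
    }

private:
    std::unique_ptr<Action> action_;
};

// Hypothetical reactions of each factor to being injured.
struct Locomotion {
    void OnInjured(Log &log) { log.push_back("locomotion: move away"); }
};
struct Body {
    void OnInjured(Log &log) { log.push_back("body: play flinch animation"); }
};
struct Vision {
    void OnInjured(Log &log) { log.push_back("vision: note attacker"); }
};

// Intention decides which Action the Behavior runs; here it responds to
// an injury by switching to a hypothetical "Flee" Action.
class Intention {
public:
    explicit Intention(Behavior behavior) : behavior_(std::move(behavior)) {}

    void OnInjured(Log &log) {
        log.push_back("intention: switch to Flee");
        behavior_.ChangeTo(std::make_unique<Action>("Flee"));
    }
    void Update(Log &log) { behavior_.Update(log); }

private:
    Behavior behavior_;
};

// The Actor relays every event to all of its factors.
class Actor {
public:
    Actor(Behavior initial, bool hasVision)
        : vision_(hasVision ? std::make_unique<Vision>() : std::unique_ptr<Vision>()),
          intention_(std::move(initial)) {}

    void OnInjured(Log &log) {
        locomotion_.OnInjured(log);
        body_.OnInjured(log);
        if (vision_) vision_->OnInjured(log);  // Vision is optional
        intention_.OnInjured(log);
    }
    void Update(Log &log) { intention_.Update(log); }

private:
    Locomotion locomotion_;
    Body body_;
    std::unique_ptr<Vision> vision_;
    Intention intention_;
};

// Ticks a bot once, injures it, then ticks it again.
Log RunDemo() {
    Log log;
    auto wander = std::make_unique<Action>("Wander");
    wander->SetChild(std::make_unique<Action>("LookAround"));
    Actor bot(Behavior(std::move(wander)), /*hasVision=*/false);
    bot.Update(log);     // Wander and its child LookAround both run
    bot.OnInjured(log);  // each factor reacts; Intention switches to Flee
    bot.Update(log);     // now only Flee runs
    return log;
}
```

Calling {{Code|RunDemo()}} yields six log entries: the Wander and LookAround updates, the Locomotion, Body and Intention reactions (Vision is skipped because this bot has none), and finally the Flee update. The actual system is considerably more involved; this sketch only models the relationships described in this section.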

{{Table

| align = center

| caption indent:top = 1em

| caption = Valve-developed games that use the NextBot system

| caption indent:bottom = 1em

| {{tr

| {{th|radius=3px 0 0 0| Game }}

{{th|radius=0 3px 0 0| Example }}

}}

{{tr

| {{td|bgcolor=#303030| {{l4d|4}} & {{l4d2|4}} }}

{{td| Survivor bots & all Infected }}

}}

{{tr

| {{td|bgcolor=#303030| {{tf2|4}} }}

{{td| Local bots, Mann vs. Machine robots, Halloween event characters }}

}}

{{tr

| {{td|bgcolor=#303030| {{dota2|4}} }}

{{td| Hero bots }}

}}

{{tr

| {{td|colspan=2|bgcolor=#303030|radius=0 0 3px 0| Although {{css|4}} and {{csgo|4}} have bots, they use their own separate systems to determine behavior. }}

}}

}}

{{Capsule|

=== The differences between a nodegraph [[NPC]] and a NextBot ===

Although Source NPCs (such as those from {{hl2|4|}}) and NextBots are both used for [[AI]], the two systems are not one and the same. Here are a few key differences that set them apart:

==== 1. NextBots use [[Nav Mesh|navigation meshes]] to move around, not [[nodegraph]]s. ====

Source NPCs use [[Node | nodes]] to determine not only where to navigate, but also what to do when they reach a specific node. NextBots do not use these nodes; instead, they use the navigation mesh to move around and can perform different actions depending on the attributes marked on a specific area.

==== 2. The NextBot system can be applied to both bots and non-playable entities. ====

The inner mechanics of the Source NPC system apply only to entities that are not players. With the NextBot system, both playable and non-playable characters can be fitted with AI if needed.

==== 3. The NextBot system is almost entirely built with ground-based entities in mind. ====

Source NPCs can be set either to navigate on the ground or to use [[Info_node_air | air nodes]] to fly around open spaces. Two NextBots ([[eyeball_boss|MONOCULUS]] and [[Merasmus]]) are capable of flight-based movement, but it is rather rudimentary: the system has no official support for "air navigation meshes" or any similar mechanic, so their only point of reference is the player(s).

|style=2}}
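The first difference (navigating over areas of a mesh and acting on the marks each area carries, rather than following nodes) can be illustrated with a small C++ sketch. The model is hypothetical: {{Code|NavArea}}, the {{Code|AREA_CROUCH}}/{{Code|AREA_JUMP}} flags and the helper functions are illustrative names, not the Source SDK's nav mesh API, and a plain breadth-first search (fewest area transitions) stands in for the cost-based pathfinding a real bot would use. Area ids are assumed to equal their index in the mesh.

```cpp
// Hypothetical sketch: a nav mesh as a graph of areas, each marked with
// attribute flags. Names are illustrative, NOT the Source SDK nav mesh API.
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <queue>
#include <string>
#include <vector>

// Hypothetical attribute bits an area can be marked with.
enum AreaFlag : std::uint32_t {
    AREA_NONE = 0,
    AREA_CROUCH = 1u << 0,
    AREA_JUMP = 1u << 1,
};

struct NavArea {
    int id;                      // assumed to equal the area's index in the mesh
    std::uint32_t flags;         // bitwise OR of AreaFlag values
    std::vector<int> neighbors;  // ids of directly connected areas
};

// Breadth-first search over connected areas (fewest area transitions).
// Returns the area ids from start to goal, or an empty vector if unreachable.
std::vector<int> BuildPath(const std::vector<NavArea> &mesh, int start, int goal) {
    const int n = static_cast<int>(mesh.size());
    assert(start >= 0 && start < n && goal >= 0 && goal < n);
    std::vector<int> cameFrom(n, -1);
    std::vector<bool> visited(n, false);
    std::queue<int> open;
    open.push(start);
    visited[start] = true;
    while (!open.empty()) {
        int current = open.front();
        open.pop();
        if (current == goal) {
            // Walk back from the goal to the start, then reverse.
            std::vector<int> path;
            for (int at = goal; at != -1; at = cameFrom[at]) path.push_back(at);
            std::reverse(path.begin(), path.end());
            return path;
        }
        for (int next : mesh[current].neighbors) {
            if (!visited[next]) {
                visited[next] = true;
                cameFrom[next] = current;
                open.push(next);
            }
        }
    }
    return {};
}

// Picks how to traverse an area from the attributes marked on it.
std::string MoveThrough(const NavArea &area) {
    if (area.flags & AREA_JUMP) return "jump";
    if (area.flags & AREA_CROUCH) return "crouch";
    return "walk";
}

// Four areas: 0 connects to 1 and 2, and 1 connects to 3.
std::vector<std::string> PlanRoute() {
    const std::vector<NavArea> mesh = {
        {0, AREA_NONE, {1, 2}},
        {1, AREA_CROUCH, {0, 3}},
        {2, AREA_NONE, {0}},
        {3, AREA_JUMP, {1}},
    };
    std::vector<std::string> steps;
    for (int id : BuildPath(mesh, 0, 3)) steps.push_back(MoveThrough(mesh[id]));
    return steps;
}
```

For the route from area 0 to area 3 the search finds 0, 1, 3, so {{Code|PlanRoute()}} returns "walk", "crouch", "jump": the movement choice comes from each area's marks, not from per-node instructions as with a nodegraph.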

=== Additional Resources ===

* [[nb_debug]]

* [[Example_of_NextBot_Behaviors | Example of NextBot Behaviors]]

=== External links ===

* [https://steamcdn-a.akamaihd.net/apps/valve/2009/ai_systems_of_l4d_mike_booth.pdf The AI Systems of Left 4 Dead] - An official PDF covering various mechanics of Left 4 Dead, including NextBots and the AI Director

** [https://www.youtube.com/watch?v=PJNQl3K58CQ Recorded video of the GDC presentation]

[[Category:Source]]

[[Category:NextBot]]

[[Category:Bots]]

Latest revision as of 21:15, 18 July 2025
